The Challenge

Businesses were spending 3–4 hours daily manually responding to customer reviews across multiple platforms. This led to delayed responses, inconsistent brand voice, and missed opportunities for customer engagement and reputation management.

Key Pain Points Identified

Manual review monitoring across 15+ platforms was time-consuming

Inconsistent brand voice in responses written by different team members

Delayed responses led to customer dissatisfaction

No centralized system for review analytics and insights

Difficulty scaling review management as the business grew

Phase 1: Research & Discovery

Duration: 3 weeks

Interviewed customer support and marketing teams to understand review workflows and challenges

Audited review platforms such as Google, Facebook, Yelp, and TripAdvisor to identify integration requirements

Analyzed existing response templates for tone consistency and gaps

Reviewed third-party online reputation management (ORM) tools

Mapped the current review response journey and highlighted bottlenecks

Phase 2: Strategy & Planning

Duration: 2 weeks

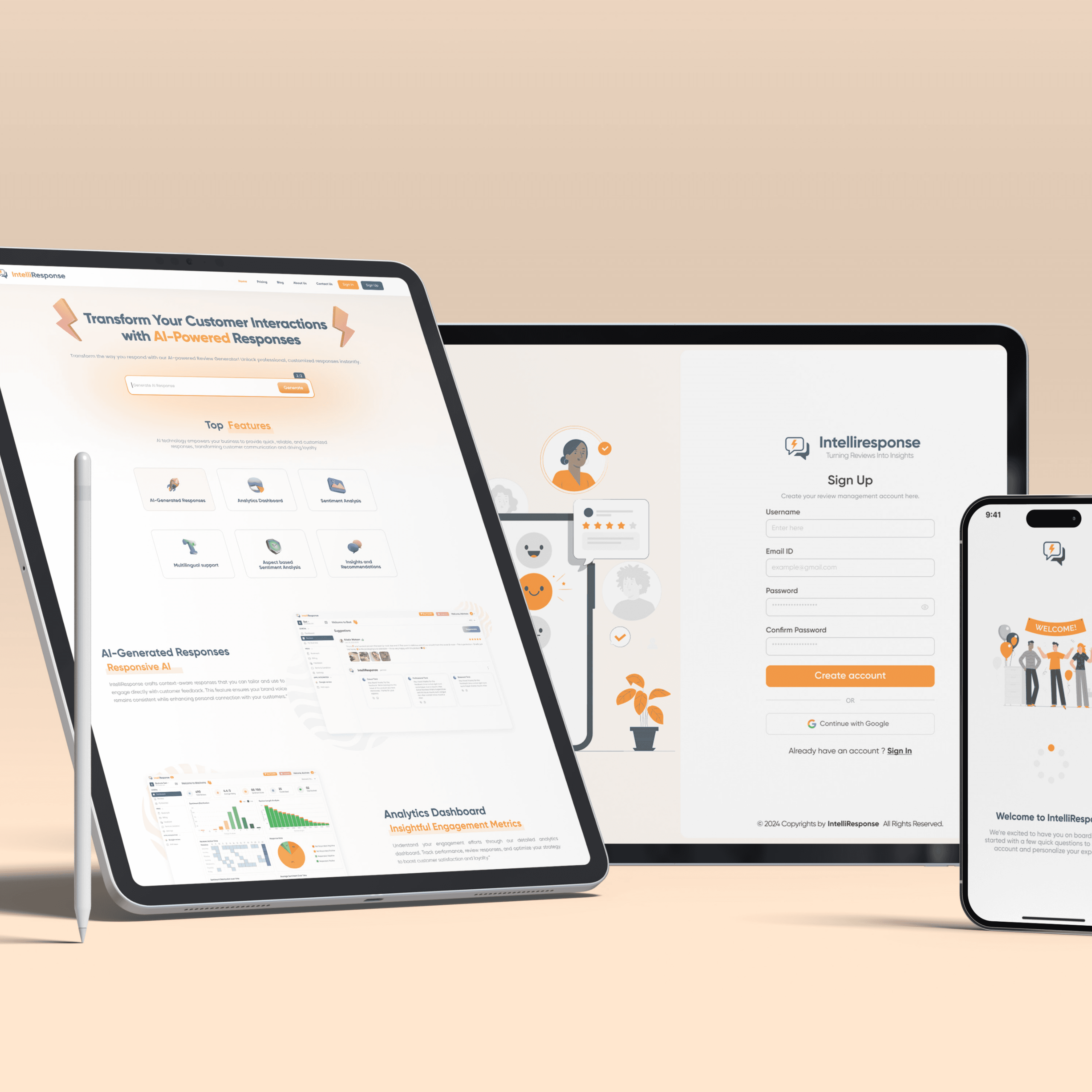

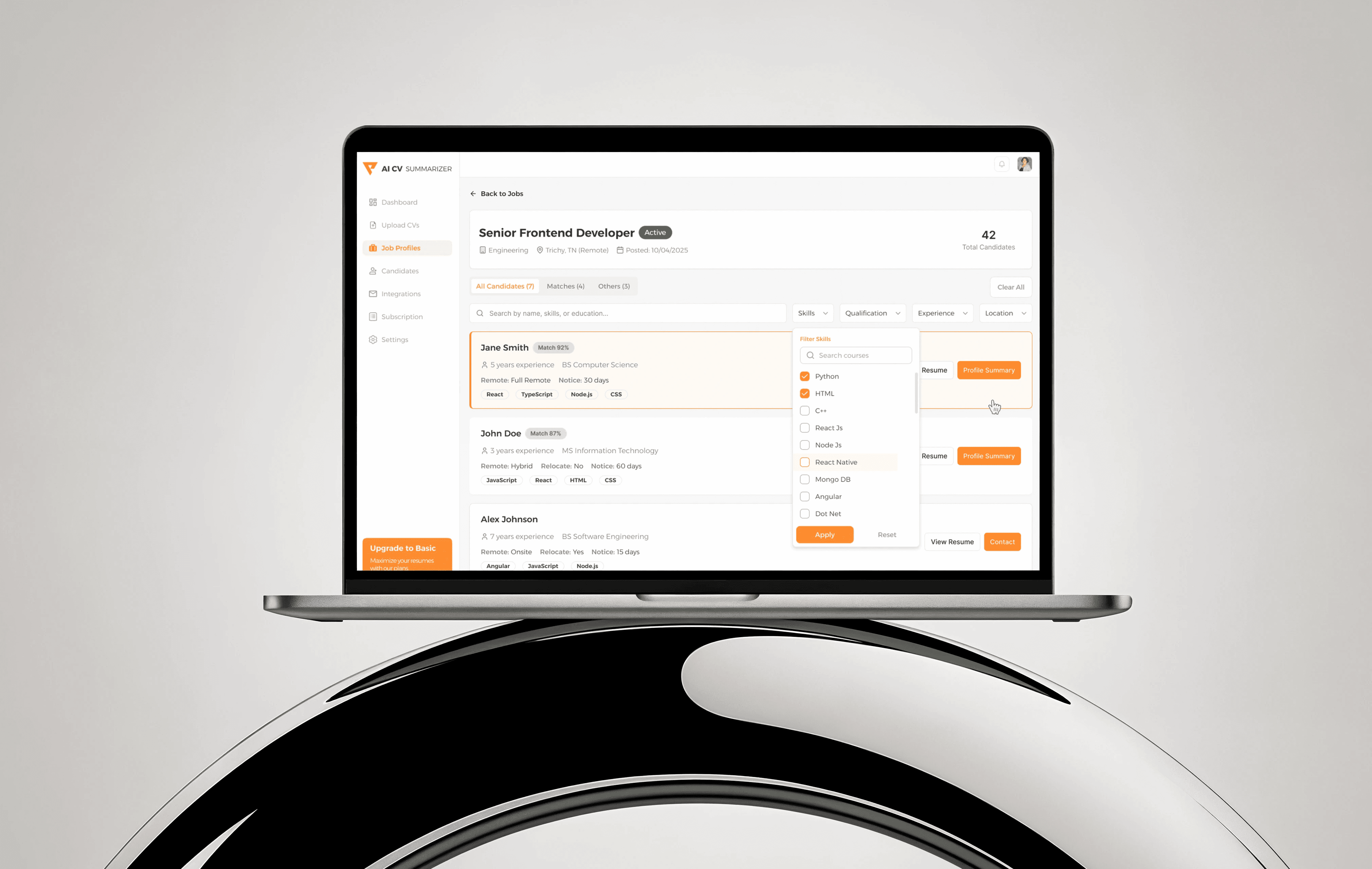

Defined a centralized dashboard architecture that brings all platforms into a single view

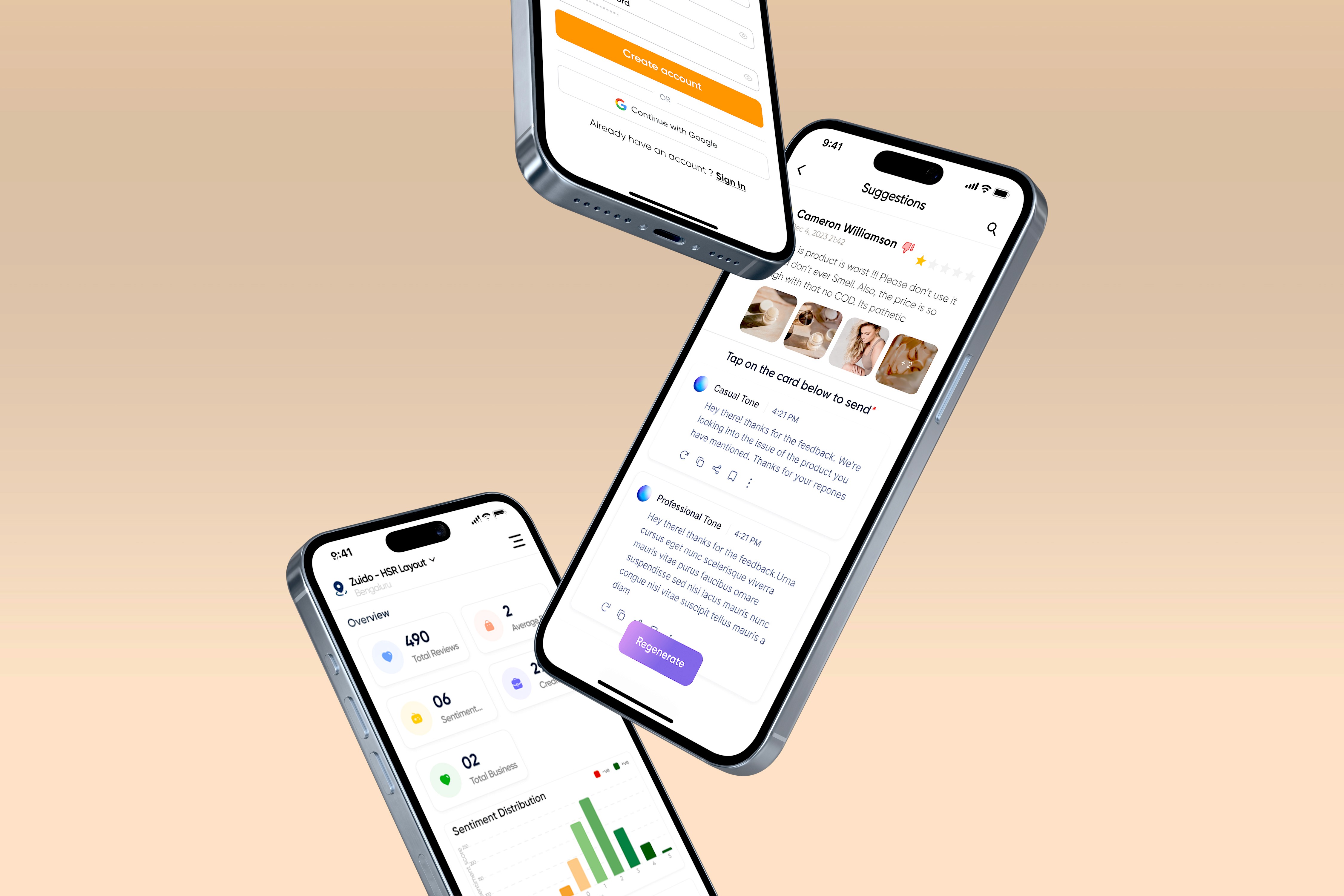

Prioritized features like AI-generated replies, sentiment tagging, and multi-user roles

Created user flows for core actions: monitor, respond, approve, analyze

Set success metrics such as response time reduction, brand voice consistency, and platform engagement

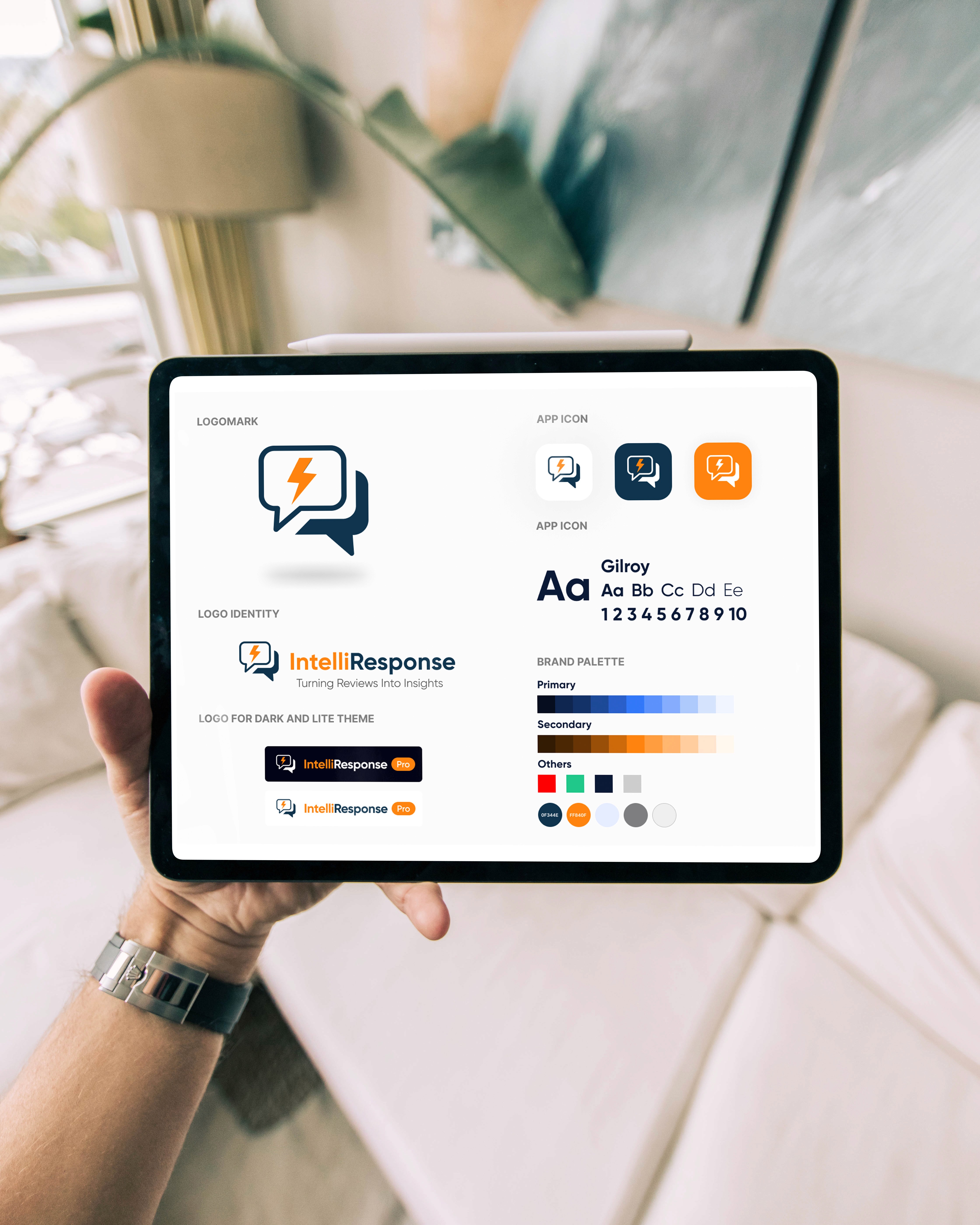

Phase 3: Design & Prototyping

Duration: 5 weeks

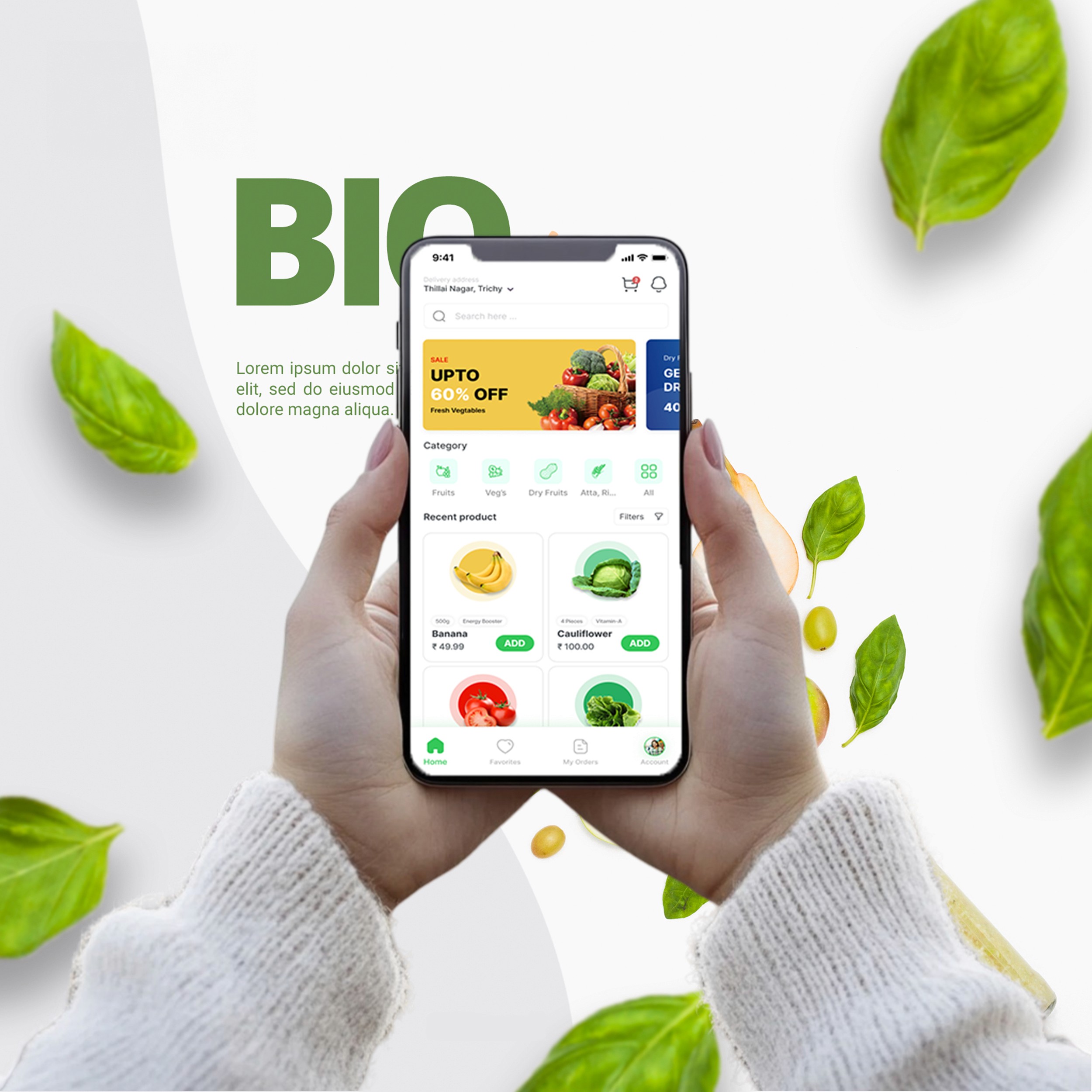

Designed low-fidelity wireframes for dashboards, inbox, review cards, and analytics

Built high-fidelity mockups with a clean, professional UI suitable for enterprise use

Developed clickable prototypes for internal testing and stakeholder walkthroughs

Designed responsive layouts for desktop, tablet, and mobile

Added micro-interactions for AI suggestions, sentiment highlights, and bulk actions

Phase 4: Testing & Iteration

Duration: 3 weeks

Conducted usability testing with 10 customer support professionals

Validated tone-matching of AI-generated replies through A/B testing

Collected feedback from product, marketing, and tech teams

Iterated on the designs to improve navigation and system feedback

Phase 5: Implementation Support

Duration: 4 weeks

Worked with developers to ensure integration with external review APIs

Provided specs, components, and icon assets for development

Performed design QA for all key user flows

Supported beta testing and monitored feedback for post-launch enhancements

Key Solutions & Impact

I helped transform the manual review response process by creating a centralized, AI-powered platform. The AI-generated reply system reduced response effort by 45%, while real-time sentiment analysis helped teams prioritize critical reviews. The unified inbox streamlined multi-platform management and improved operational efficiency. Tone guidelines and approval workflows kept responses consistent and protected brand integrity, and the new analytics dashboard gave teams actionable insights, contributing to a 32% increase in engagement.

Key Learnings from the Project

This project highlighted the value of AI-assisted workflows in reducing operational load. I learned how vital brand tone consistency is across large teams and many platforms. Designing an intuitive dashboard for non-technical users was a key challenge that taught me to simplify complexity without limiting functionality. Most importantly, I discovered that review management is not just a support task but a powerful tool for engagement and building trust.

Future Enhancements

Moving forward, I plan to introduce multi-language AI responses to support global businesses. An escalation system for reviews with negative sentiment will allow teams to engage proactively. I also aim to add integrations with CRM and ticketing systems for seamless follow-ups. Lastly, automated insights and trend detection will help businesses turn reviews into strategic opportunities.